AI You Can Talk To

In a world increasingly driven by intelligent machines, the integration of large language models (LLMs) with physical hardware is shaping a new technological frontier. This combination lets users control devices through natural language, making interaction with machines more intuitive, efficient, and human-like. As we enter this era, the ability to simply speak and have machines understand, respond, and act is becoming more than a convenience: it is becoming the foundation of a smarter, more connected future.

What Are Language Models and Why Do They Matter?

Language models like GPT-4 or Claude are AI systems trained on massive datasets of text to understand and generate human language. They excel at processing complex instructions, carrying on natural conversations, and even reasoning through multi-step problems. When integrated into hardware (robots, smart home devices, wearables, industrial tools), they serve as a cognitive layer, translating human intentions into machine actions.

Instead of learning complicated control interfaces or programming devices manually, users can now simply say what they want:

"Turn off the lights in the living room," "Move the robotic arm two inches to the left," or "Set the temperature to 22 degrees."

The language model interprets these commands, understands context, and triggers the necessary hardware response.
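As a minimal sketch of that last step, assume the language model has been prompted to reply with a small JSON object describing the command. The schema (`device`, `action`, `value`) and the handler names below are hypothetical, chosen only for illustration; they are not taken from any particular product or API.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    device: str
    action: str
    value: Optional[float] = None


def parse_command(model_reply: str) -> Command:
    """Turn the model's JSON reply into a typed Command (hypothetical schema)."""
    data = json.loads(model_reply)
    return Command(device=data["device"], action=data["action"], value=data.get("value"))


# Hypothetical handlers; real ones would call a device driver or vendor API.
def lights_off(cmd: Command) -> str:
    return f"lights off in {cmd.device}"


def set_temperature(cmd: Command) -> str:
    return f"thermostat set to {cmd.value} degrees"


HANDLERS = {
    "lights_off": lights_off,
    "set_temperature": set_temperature,
}


def dispatch(cmd: Command) -> str:
    """Look up the handler for the requested action and run it."""
    handler = HANDLERS.get(cmd.action)
    if handler is None:
        # Refuse anything outside the known action list instead of guessing.
        raise ValueError(f"unsupported action: {cmd.action}")
    return handler(cmd)


reply = '{"device": "living room", "action": "lights_off"}'
print(dispatch(parse_command(reply)))
```

Restricting the model to a fixed set of actions keeps the hardware side predictable: anything the dispatcher does not recognize is rejected rather than executed.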

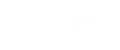

How Language Models Interact with Hardware

The fusion of LLMs and hardware typically involves several layers:

- Voice Interface (e.g., microphone input processed via speech recognition, using a model such as Whisper)

- Language Understanding (powered by models like GPT-4 or Claude)

- Command Mapping & Control Logic (translating natural language into machine-level instructions)

- Hardware Execution (the physical device takes action)

This architecture allows for multimodal interactions. A user can combine speech, gestures, and visual input, and the system can respond intelligently based on the full context, something previously limited to science fiction.
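The four layers can be sketched as a chain of functions. Every function below is a hypothetical stand-in: in a real system `transcribe` would wrap a speech recognizer, `understand` would call a language model, and `execute` would talk to a device. Here they are stubbed with simple keyword rules so the sketch runs on its own.

```python
import re
from typing import Optional


def transcribe(audio: bytes) -> str:
    """Voice interface stub: pretend the audio bytes are already UTF-8 text."""
    return audio.decode("utf-8")


def understand(text: str) -> dict:
    """Language understanding stub: keyword rules standing in for an LLM call."""
    lowered = text.lower()
    match = re.search(r"temperature to (\d+)", lowered)
    if match:
        return {"intent": "set_temperature", "celsius": int(match.group(1))}
    if "lights" in lowered and "off" in lowered:
        return {"intent": "lights_off"}
    return {"intent": "unknown"}


def map_to_instruction(intent: dict) -> Optional[str]:
    """Command mapping: translate an intent into a made-up device instruction."""
    if intent["intent"] == "set_temperature":
        return f"THERMOSTAT SET {intent['celsius']}"
    if intent["intent"] == "lights_off":
        return "LIGHTS OFF"
    return None


def execute(instruction: Optional[str]) -> str:
    """Hardware execution stub: report what would be sent to the device."""
    if instruction is None:
        return "no action taken"
    return f"sent: {instruction}"


def handle(audio: bytes) -> str:
    """Run one utterance through all four layers in order."""
    return execute(map_to_instruction(understand(transcribe(audio))))


print(handle(b"Set the temperature to 22 degrees"))
```

Keeping each layer behind its own function means any one of them, for instance the speech recognizer or the language model, can be swapped out without touching the others.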

Applications Across Industries

- Smart Homes: Voice-activated assistants are evolving into true conversational companions. With deeper understanding, LLMs can now manage entire home environments based on nuanced requests or preferences.

- Healthcare: Surgeons may one day verbally control surgical robots mid-operation. Elderly patients can manage health monitoring systems through natural conversation.

- Manufacturing and Robotics: Workers can guide robots using spoken instructions, speeding up workflows without the need for technical training.

- Automotive: In-car systems enhanced with LLMs provide more helpful, human-like interactions, such as adjusting navigation, temperature, or media through natural dialogue.

Challenges and Considerations

Despite the promise, integrating language models with hardware brings challenges:

- Latency: Real-time responsiveness is essential, especially in physical environments.

- Safety: Misinterpreted commands could have serious consequences in healthcare or industrial settings.

- Privacy: Voice and usage data must be secured, especially as systems become more personalized.

- Edge Deployment: Running LLMs on-device (instead of in the cloud) is vital for speed and data control, but remains technically demanding.
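One way to reduce the safety risk listed above is to place a validation step between the language model and the hardware. The sketch below is only an assumption about how such a gate might look: the temperature limits, the action names, and the `check_command` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical limits and action names, for illustration only.
TEMPERATURE_RANGE_C = (10.0, 30.0)
NEEDS_CONFIRMATION = {"move_arm", "unlock_door"}


@dataclass
class Decision:
    allowed: bool
    reason: str


def check_command(action: str, value: Optional[float] = None,
                  confirmed: bool = False) -> Decision:
    """Decide whether a model-proposed command may reach the hardware."""
    if action == "set_temperature":
        low, high = TEMPERATURE_RANGE_C
        # Reject missing or out-of-range values, e.g. a misheard "220 degrees".
        if value is None or not (low <= value <= high):
            return Decision(False, f"temperature must be between {low} and {high} C")
    if action in NEEDS_CONFIRMATION and not confirmed:
        # Physically risky actions wait for an explicit "yes" from the user.
        return Decision(False, "explicit user confirmation required")
    return Decision(True, "ok")


print(check_command("set_temperature", 220))
print(check_command("move_arm"))
print(check_command("move_arm", confirmed=True))
```

The gate runs in plain code rather than inside the model, so a misinterpreted command is caught by fixed rules even when the model itself is confident.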

The Road Ahead

Advances in edge AI, low-power chips, and model compression are making it possible to run language models directly on devices. This means faster responses, better privacy, and more autonomy. Soon, appliances, robots, and everyday tools will not only listen but also understand and act intelligently.

Conclusion

The integration of language models with hardware is more than a convenience; it is a fundamental shift in how humans interact with machines. As the line between software and physical systems blurs, we are entering a future where control is as simple and natural as speaking.