Generative Language Models as a Cognitive Layer for Humanoid Robots

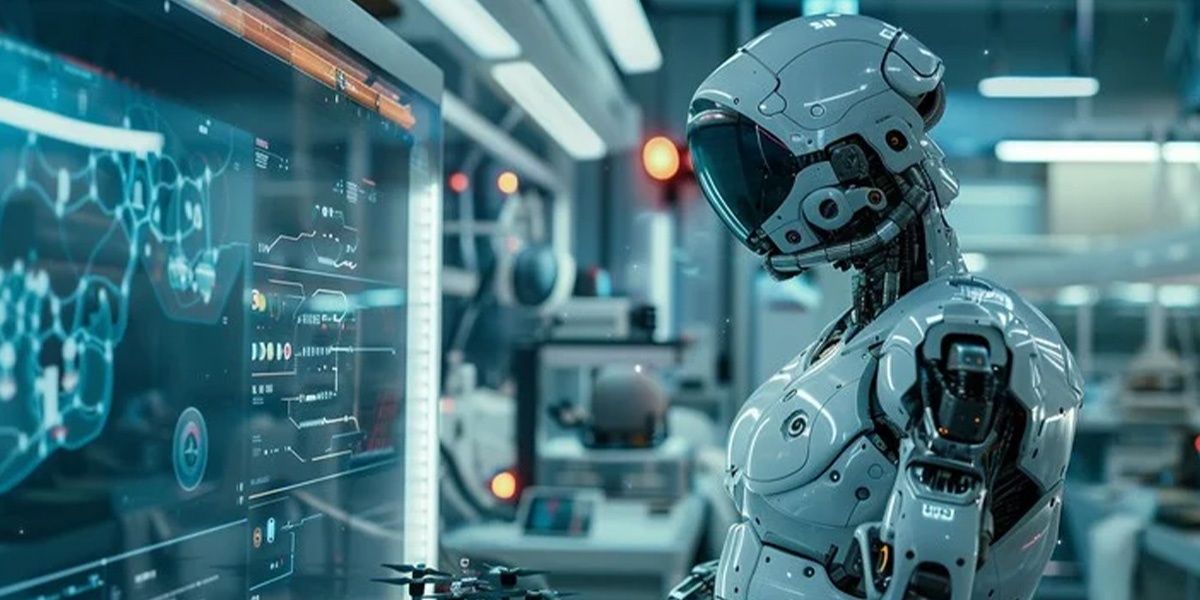

In recent years, the combination of humanoid robots and generative large language models (LLMs) has opened new possibilities for human-machine interaction. As robots have become more physically capable, what has held back natural communication is their limited ability to understand and express language. Now, with the integration of advanced LLMs such as GPT-4o, Claude, and Gemini, humanoid robots are becoming more intuitive, context-aware, and human-like in their responses and actions.

Natural Language Understanding

Traditional robots often relied on pre-programmed commands and rigid syntax. Generative language models, by contrast, bring contextual understanding and semantic depth. They can interpret ambiguous phrases, adjust to different tones, and often pick up on sarcasm or emotion. For humanoid robots, this means responding less mechanically and conversing more naturally, adapting to the user's mood, intention, or environment.

For instance, when a user says, "I'm feeling a bit cold," a robot powered by an LLM can interpret this as a potential action request (perhaps to close a window or adjust the thermostat) without needing an explicit command. This kind of interpretation was out of reach for command-driven robots only a few years ago.
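One way to picture the "I'm feeling a bit cold" case is as a two-step exchange: the robot asks the model to choose from a fixed set of actions, then checks the reply before acting on it. The following is a minimal sketch under stated assumptions, not how any particular robot works. The action names, the prompt wording, and the JSON reply format are all hypothetical, and the model call itself is left out, so the reply is just a string.

```python
import json
from dataclasses import dataclass

# Hypothetical action names; a real robot would expose its own set.
ALLOWED_ACTIONS = {
    "close_window",
    "raise_thermostat",
    "fetch_blanket",
    "ask_clarification",
}


@dataclass
class ProposedAction:
    name: str
    reason: str


def build_prompt(utterance: str) -> str:
    """Ask the model to pick one allowed action and reply as JSON."""
    actions = ", ".join(sorted(ALLOWED_ACTIONS))
    return (
        "You control a home robot. The user said: "
        f"{utterance!r}. Choose one action from [{actions}] and reply as JSON "
        'with keys "action" and "reason".'
    )


def parse_reply(reply: str) -> ProposedAction:
    """Validate the model's reply; fall back to asking the user when unsure."""
    try:
        data = json.loads(reply)
        name = data["action"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return ProposedAction("ask_clarification", "reply was not usable JSON")
    if not isinstance(name, str) or name not in ALLOWED_ACTIONS:
        return ProposedAction("ask_clarification", f"unknown action: {name}")
    return ProposedAction(name, str(data.get("reason", "")))


if __name__ == "__main__":
    print(build_prompt("I'm feeling a bit cold"))
    # Stand-in for a model reply; no model is called in this sketch.
    print(parse_reply('{"action": "close_window", "reason": "user is cold"}'))
```

The allow-list is the design choice worth noting: the model can suggest, but only actions the robot already knows how to perform safely get through, and anything else turns into a clarifying question.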

Multi-Modal Interaction

Models like GPT-4o are not just text-based; they are multi-modal, meaning they can process voice, image, and even video inputs and generate responses from them. This matters a great deal for robots equipped with cameras and microphones. It lets them take in sights and sounds alongside words, while interpreting language with a strong grasp of nuance.

Imagine a robot assistant in a home or hospital setting. If it sees a patient lying uncomfortably or hears distress in someone’s voice, it can respond with empathy and take appropriate action. This brings AI-powered robotics closer to true emotional intelligence, which is essential for trust and acceptance in human environments.

Embodied AI: Thinking and Acting Together

The real power emerges when LLMs are paired with a physical body: arms, legs, sensors, and cameras. This concept, known as Embodied AI, allows robots to reason through complex instructions and translate them into physical action. If someone tells a robot, "Bring me the red book from the table next to the lamp," the language model breaks the request into steps, and the robot's perception, navigation, and manipulation systems identify the correct object from visual input, move through the space, and carry out the action.
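The red-book request can be sketched as a plan of small steps, each handed to a robot skill. This is an illustrative sketch only: the `Step` structure, the skill names, and the plan itself are assumptions written by hand here, where a real system would have the language model produce the plan and real perception, navigation, and manipulation code behind each skill.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Step:
    skill: str
    args: Dict[str, str] = field(default_factory=dict)


# A plan a language model might produce for the request (hand-written here).
PLAN: List[Step] = [
    Step("locate", {"object": "red book", "near": "table next to the lamp"}),
    Step("navigate", {"target": "red book"}),
    Step("grasp", {"object": "red book"}),
    Step("navigate", {"target": "user"}),
    Step("hand_over", {"object": "red book"}),
]

Skill = Callable[[Dict[str, str]], bool]


def run_plan(plan: List[Step], skills: Dict[str, Skill], log: List[str]) -> bool:
    """Run steps in order; stop at the first missing or failing skill."""
    for step in plan:
        handler = skills.get(step.skill)
        if handler is None:
            log.append(f"abort: no skill named {step.skill}")
            return False
        if not handler(step.args):
            log.append(f"abort: {step.skill} failed")
            return False
        log.append(f"done: {step.skill}")
    return True


def make_stub_skills() -> Dict[str, Skill]:
    """Stand-ins for real robot skills; each one simply reports success."""
    names = ["locate", "navigate", "grasp", "hand_over"]
    return {name: (lambda args: True) for name in names}


if __name__ == "__main__":
    log: List[str] = []
    ok = run_plan(PLAN, make_stub_skills(), log)
    print(ok, log)
```

Keeping the plan as plain data separates the reasoning from the motion: the language side decides what to do and in what order, while each skill decides how, and can report failure so the plan can be stopped or revised.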

Companies like Figure, Tesla, and Sanctuary AI are already working on humanoid robots that integrate such capabilities. The fusion of language understanding and motor control is no longer purely theoretical; it is being built into working robots.

The Future

As generative models become faster, smaller, and more efficient, we can expect more powerful on-device AI in humanoid robots. These robots will not just execute commands; they will collaborate, learn, and even negotiate with humans. The line between machine and intelligent companion is quickly blurring.

In conclusion, generative language models are transforming humanoid robots from mechanical tools into intelligent, social partners. Through better language understanding, multi-modal perception, and embodied reasoning, the future of human-robot interaction looks more human than ever.