Nvidia A100 40GB GPU

Article Content:

- Introduction to the Nvidia A100 40GB GPU

- Key Features and Specifications

- Nvidia A100 Models and Their Differences

- Nvidia A100 40GB SXM vs A100 40GB PCIe: A Detailed Comparison

- Applications and Use Cases of the Nvidia A100 40GB

- Compatibility and Integration

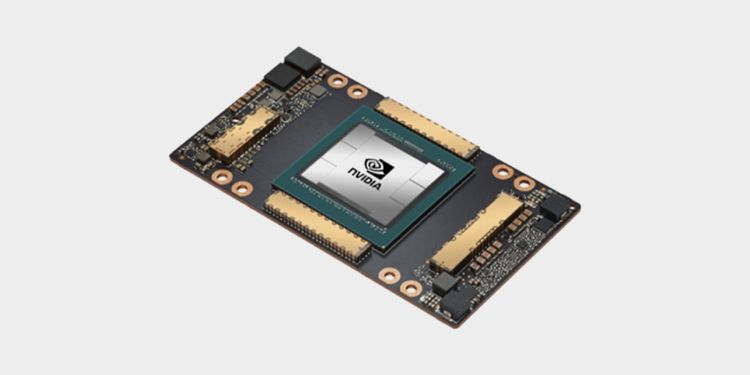

The Nvidia A100 40GB is a data center GPU designed for artificial intelligence, machine learning, and data analysis. With 40GB of HBM2 memory and roughly 1.6 TB/s of memory bandwidth, it handles complex computational tasks and large datasets. Its tensor cores deliver up to 624 TOPS for INT8 and 312 TFLOPS for FP16, which suits medium to large-scale AI workloads. It has half the memory capacity of the 80GB variant, but it remains a cost-effective option for many demanding applications, balancing performance and affordability.

- Introduction to the Nvidia A100 40GB GPU

Built on Nvidia's Ampere architecture, the A100 40GB balances computational capability and memory capacity for demanding workloads. This variant targets applications that need substantial parallel processing power but do not need the larger memory capacity of its 80GB counterpart.

- Key Features and Specifications

- CUDA Cores and Clock Speed:

- CUDA Cores: 6,912

- Boost Clock Speed: 1,410 MHz

The A100 40GB has the same CUDA core count as its 80GB sibling, so parallel processing performance is comparable. Its 1,410 MHz boost clock is lower than that of some other high-end GPUs, a trade-off that favors power efficiency over peak frequency.
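The core count and boost clock above are enough for a rough estimate of theoretical peak FP32 throughput. The sketch below assumes each CUDA core retires one fused multiply-add (counted as 2 floating-point operations) per clock; that assumption and the function name are illustrative and not taken from this article.

```python
# Rough theoretical peak FP32 throughput from the figures listed above.
CUDA_CORES = 6912
BOOST_CLOCK_HZ = 1410e6  # 1,410 MHz

# Assumption: one fused multiply-add per core per clock, counted as 2 FLOPs.
FLOPS_PER_CORE_PER_CLOCK = 2


def peak_fp32_tflops(cores: int, clock_hz: float,
                     flops_per_clock: int = FLOPS_PER_CORE_PER_CLOCK) -> float:
    """Return theoretical peak throughput in TFLOPS."""
    return cores * clock_hz * flops_per_clock / 1e12


fp32_tflops = peak_fp32_tflops(CUDA_CORES, BOOST_CLOCK_HZ)
print(f"Peak FP32: {fp32_tflops:.1f} TFLOPS")  # prints "Peak FP32: 19.5 TFLOPS"
```

This is an upper bound at boost clock; sustained throughput in real workloads is typically lower.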

- Memory and Bandwidth:

- Memory: 40GB HBM2

- Memory Bandwidth: 1.6 TB/s

- Memory Interface: 5,120-bit

The 40GB model offers ample memory and bandwidth, though less than the 80GB version. It provides sufficient capacity for a wide range of AI, data science, and HPC applications.
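The bandwidth figure follows from the memory interface width and the memory data rate. The sketch below assumes a 1,215 MHz memory clock with two transfers per clock (double data rate); that clock value is not stated in this article and should be treated as an assumption.

```python
# Derive memory bandwidth from the bus width listed above.
BUS_WIDTH_BITS = 5120

# Assumptions (not from this article): memory clock and double data rate.
MEMORY_CLOCK_HZ = 1215e6
TRANSFERS_PER_CLOCK = 2


def memory_bandwidth_gbs(bus_bits: int, clock_hz: float,
                         transfers_per_clock: int = TRANSFERS_PER_CLOCK) -> float:
    """Return theoretical memory bandwidth in GB/s."""
    bytes_per_transfer = bus_bits / 8
    return bytes_per_transfer * clock_hz * transfers_per_clock / 1e9


bandwidth_gbs = memory_bandwidth_gbs(BUS_WIDTH_BITS, MEMORY_CLOCK_HZ)
print(f"Memory bandwidth: {bandwidth_gbs:.1f} GB/s")  # prints 1555.2 GB/s, about 1.6 TB/s

# Time to stream the full 40 GB once at that theoretical rate.
full_sweep_ms = 40 / bandwidth_gbs * 1000
print(f"One pass over 40 GB: {full_sweep_ms:.1f} ms")
```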

- Tensor Cores and Processing Power:

- Tensor Operations:

- INT8: 624 TOPS

- FP16: 312 TFLOPS

- TF32: 156 TFLOPS

The A100 40GB delivers high throughput in tensor operations, which are central to AI and deep learning. Its tensor cores process high-dimensional data efficiently, reducing training and inference times for machine learning models.
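The three tensor figures above are related by simple ratios, and they can be reproduced from the boost clock. The sketch below assumes 432 tensor cores, each performing 256 dense FP16 fused multiply-adds per clock, with TF32 at half that rate and INT8 at double; those per-core values are assumptions that do not appear in this article.

```python
BOOST_CLOCK_HZ = 1410e6  # boost clock listed in this article

# Assumptions (not from this article): tensor core count and per-clock rate.
TENSOR_CORES = 432
FP16_FMA_PER_CORE_PER_CLOCK = 256

# Throughput of each precision relative to FP16 (assumed ratios).
RELATIVE_RATE = {"FP16": 1.0, "TF32": 0.5, "INT8": 2.0}


def peak_tensor_tera_ops(precision: str) -> float:
    """Return theoretical dense peak in tera-operations per second."""
    fma_per_clock = (TENSOR_CORES * FP16_FMA_PER_CORE_PER_CLOCK
                     * RELATIVE_RATE[precision])
    # Each fused multiply-add counts as 2 operations.
    return fma_per_clock * 2 * BOOST_CLOCK_HZ / 1e12


for name in ("INT8", "FP16", "TF32"):
    print(f"{name}: {peak_tensor_tera_ops(name):.0f}")
```

Under these assumptions the results round to the 624, 312, and 156 figures listed above.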

- Interconnect and Scalability:

- NVLink: 12 links providing up to 600 GB/s

- Interconnect Bandwidth: NVLink provides high-speed data transfer between GPUs, which allows multi-GPU setups to scale effectively.
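To put the NVLink figure in context, the sketch below works out the per-link bandwidth and compares an idealized GPU-to-GPU transfer over NVLink with one over PCIe Gen 4.0 x16. The 64 GB/s PCIe figure is an assumption (a commonly quoted bidirectional peak), and both results ignore latency and protocol overhead.

```python
NVLINK_LINKS = 12
NVLINK_TOTAL_GBS = 600.0

# Assumption: commonly quoted bidirectional peak for PCIe Gen 4.0 x16.
PCIE_GEN4_X16_GBS = 64.0

per_link_gbs = NVLINK_TOTAL_GBS / NVLINK_LINKS


def transfer_ms(size_gb: float, bandwidth_gbs: float) -> float:
    """Idealized transfer time in milliseconds at peak bandwidth."""
    return size_gb / bandwidth_gbs * 1000


payload_gb = 10.0  # hypothetical payload, e.g. a set of model gradients
print(f"Per NVLink link: {per_link_gbs:.0f} GB/s")
print(f"NVLink: {transfer_ms(payload_gb, NVLINK_TOTAL_GBS):.1f} ms")
print(f"PCIe:   {transfer_ms(payload_gb, PCIE_GEN4_X16_GBS):.1f} ms")
```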

- Architecture and Design:

- Chip Architecture: Ampere GA100

- Transistor Count: 54.2 billion

- Lithography: 7nm TSMC

The A100 40GB is built on the Ampere architecture, with a high transistor count and an efficient 7nm manufacturing process. This design contributes to its computational power and energy efficiency.

- Nvidia A100 Models and Their Differences

Nvidia A100 GPUs are available in two main models with different memory capacities: 40GB and 80GB. These models have differences in features and applications, as detailed below:

1) Nvidia A100 40GB

- Memory: 40GB HBM2

- Memory Bandwidth: 1.6 TB/s

- CUDA Cores: 6,912

- Tensor Operations:

- INT8: 624 TOPS

- FP16: 312 TFLOPS

- TF32: 156 TFLOPS

Advantages:

- Lower Cost: Generally, the 40GB model is more cost-effective.

- Suitable for Medium Applications: For many use cases such as machine learning and large data processing, 40GB of memory may be sufficient.

- High Processing Power: Despite having less memory, the 40GB model has the same CUDA core count and tensor throughput as the 80GB model.

Disadvantages:

- Limited Memory Capacity: Compared to the 80GB model, the 40GB model may not be sufficient for very large and complex applications that require more memory.

- Lower Memory Bandwidth: The 40GB model provides 1.6 TB/s of memory bandwidth versus 2.0 TB/s on the 80GB model, which can affect performance in data-intensive tasks.

2) Nvidia A100 80GB

- Memory: 80GB HBM2e

- Memory Bandwidth: 2.0 TB/s

- CUDA Cores: 6,912

- Tensor Operations:

- INT8: 624 TOPS

- FP16: 312 TFLOPS

- TF32: 156 TFLOPS

Advantages:

- Increased Memory Capacity: With 80GB of memory, this model is suitable for larger and more complex applications and can handle more data simultaneously.

- Higher Memory Bandwidth: The 2.0 TB/s memory bandwidth improves data transfer speeds and enhances performance in heavy computational tasks.

- Better for Complex Processing: Ideal for large-scale scientific simulations, training very large models, and handling data-intensive tasks.

Disadvantages:

- Higher Cost: The 80GB model, due to its higher memory capacity and advanced performance, comes with a higher price tag.

- Higher Power Draw in Some Configurations: The 80GB model uses the same form factors as the 40GB model, but its PCIe version has a higher power rating (300W versus 250W), which may require additional cooling.

Conclusion

Choosing between the Nvidia A100 40GB and 80GB models depends on your specific needs. If you need high processing power with adequate memory for most applications and have a limited budget, the 40GB model might be sufficient. However, if you need to handle very large, memory-intensive workloads and have a higher budget, the 80GB model offers better performance.

When selecting the appropriate model, consider not only the memory capacity and bandwidth but also your specific requirements and compatibility with other system components and infrastructure.
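One way to make this choice concrete is a back-of-the-envelope memory estimate. The sketch below is a rough heuristic, not a sizing tool: it assumes about 16 bytes per parameter for mixed-precision training with an Adam-style optimizer, 2 bytes per parameter for FP16 inference, and a hypothetical 20% allowance for activations and buffers. All three values and the function names are assumptions.

```python
# Assumed bytes per parameter (rules of thumb, not from this article).
BYTES_PER_PARAM_TRAINING = 16   # FP16 weights and gradients plus FP32 optimizer state
BYTES_PER_PARAM_INFERENCE = 2   # FP16 weights only

CAPACITIES_GB = (40, 80)


def required_gb(num_params: float, bytes_per_param: int,
                overhead_fraction: float = 0.2) -> float:
    """Estimated memory need in GB, including a hypothetical overhead allowance."""
    return num_params * bytes_per_param * (1 + overhead_fraction) / 1e9


def smallest_fitting_model(num_params: float, bytes_per_param: int) -> str:
    """Return the smallest A100 variant whose memory covers the estimate."""
    need = required_gb(num_params, bytes_per_param)
    for capacity in CAPACITIES_GB:
        if need <= capacity:
            return f"A100 {capacity}GB"
    return "multiple GPUs"


print(smallest_fitting_model(1.3e9, BYTES_PER_PARAM_TRAINING))
print(smallest_fitting_model(3e9, BYTES_PER_PARAM_TRAINING))
print(smallest_fitting_model(13e9, BYTES_PER_PARAM_INFERENCE))
```

Actual memory use depends heavily on batch size, sequence length, and framework, so estimates like this are best confirmed by profiling.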

- Nvidia A100 40GB SXM vs A100 40GB PCIe: A Detailed Comparison

Nvidia offers the A100 40GB GPU in two form factors: SXM and PCIe. Both use the same Ampere GPU and target high-performance computing (HPC), AI workloads, and large-scale data centers, but they differ in physical interface, interconnect, power limits, and typical deployments. Below is a comparison of the two, highlighting their strengths, weaknesses, and ideal use cases.

- Nvidia A100 40GB SXM

- Architecture and Connection: The A100 SXM model uses the SXM4 form factor and mounts directly on the server board, with full NVLink support. This enables higher-bandwidth communication between GPUs.

- Memory Bandwidth: It provides up to 1.6 TB/s of memory bandwidth.

- Power Consumption: This model consumes more power, with a 400W power requirement that calls for advanced cooling.

- Use Cases: The A100 SXM is designed for demanding data center environments, HPC workloads, and AI research that requires massive parallel processing and ultra-high data throughput.

Advantages:

- High GPU-to-GPU bandwidth (up to 600 GB/s) through NVLink.

- Optimal for multi-GPU setups requiring high communication speed between GPUs.

- Higher performance in large-scale AI and HPC projects.

Disadvantages:

- Higher cost.

- Requires more advanced cooling due to higher power consumption.

- Limited compatibility with standard servers; needs specialized SXM motherboards.

- Nvidia A100 40GB PCIe

- Architecture and Connection: The PCIe version uses the PCIe Gen 4.0 interface, which is widely supported across many servers and motherboards, making it easier to integrate into existing infrastructures.

- Memory Bandwidth: About 1.6 TB/s, since the card uses the same 40GB HBM2 memory as the SXM version. The PCIe interface affects host and GPU-to-GPU transfers, not on-board memory bandwidth.

- Power Consumption: Lower power consumption at 250W, reducing the need for complex cooling systems.

- Use Cases: The A100 PCIe model is more suitable for organizations that need high-performance GPU computing but lack the infrastructure for advanced cooling or multi-GPU systems.

Advantages:

- Broad compatibility with standard servers and systems.

- Lower power consumption, requiring less cooling.

- More affordable compared to the SXM model.

Disadvantages:

- Lower GPU-to-GPU bandwidth than the SXM version in larger multi-GPU systems.

- NVLink is available only through bridges that link pairs of GPUs, which limits direct communication across larger multi-GPU setups.

- Slightly lower performance in complex, large-scale workloads.

Head-to-Head Comparison

- Performance: The SXM model outperforms the PCIe variant, particularly in multi-GPU and parallel processing tasks, thanks to NVLink and its higher interconnect bandwidth.

- Cost: The PCIe model is more cost-effective, making it ideal for organizations with budget constraints or less complex GPU computing needs.

- Flexibility: PCIe is more flexible in terms of server compatibility, while SXM is limited to specialized systems but offers higher performance in demanding workloads.
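The power figures quoted above translate directly into node-level power and energy budgets. The sketch below uses the 400W and 250W ratings from this comparison; the 8-GPU node size and the assumption of continuous full load are hypothetical, and host CPUs, fans, and cooling overhead are left out.

```python
HOURS_PER_YEAR = 8760

# Power ratings quoted in this comparison.
TDP_WATTS = {"SXM": 400, "PCIe": 250}


def node_gpu_power_w(form_factor: str, gpu_count: int) -> int:
    """Total GPU power for one node, excluding the host system."""
    return TDP_WATTS[form_factor] * gpu_count


def annual_energy_kwh(form_factor: str, gpu_count: int) -> float:
    """Energy per year at continuous full load (a worst-case assumption)."""
    return node_gpu_power_w(form_factor, gpu_count) * HOURS_PER_YEAR / 1000


for form_factor in TDP_WATTS:
    watts = node_gpu_power_w(form_factor, 8)
    kwh = annual_energy_kwh(form_factor, 8)
    print(f"{form_factor}: {watts} W per 8-GPU node, {kwh:.0f} kWh per year")
```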

- Applications and Use Cases of the Nvidia A100 40GB

- Artificial Intelligence and Machine Learning:

- The A100 40GB is well-suited for training large-scale AI models and running complex machine learning algorithms. Its tensor cores accelerate matrix operations, which shortens training and inference times.

- High-Performance Computing (HPC):

- Ideal for scientific simulations and computational research, the A100 40GB supports various HPC tasks, from climate modeling to molecular dynamics, by delivering robust performance in parallel computations.

- Data Analytics and Big Data:

- The GPU's memory bandwidth and capacity enable efficient handling of large datasets and complex queries. It facilitates faster data processing and analytics in large-scale data environments.

- Cloud Computing:

- The A100 40GB is frequently used in cloud environments, where it supports scalable compute resources for various applications, including GPU-accelerated virtual machines and cloud-based AI services.

- Graphics and Visualization:

- The A100 40GB is a compute accelerator with no display outputs and no RT cores, so it is not a graphics card in the usual sense. It can still accelerate compute-driven visualization and simulation tasks, such as rendering results from large scientific datasets.
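Whether a given workload benefits more from the tensor throughput or from the memory bandwidth described in these use cases can be estimated with a simple roofline model. The sketch below uses the 312 TFLOPS FP16 and 1.6 TB/s figures from this article; the function names and the two example workloads are illustrative assumptions.

```python
PEAK_FP16_TFLOPS = 312.0    # dense FP16 tensor throughput quoted in this article
MEMORY_BANDWIDTH_TBS = 1.6  # memory bandwidth quoted in this article


def ridge_point_flop_per_byte() -> float:
    """Arithmetic intensity above which a kernel becomes compute-bound."""
    return PEAK_FP16_TFLOPS / MEMORY_BANDWIDTH_TBS


def attainable_tflops(flop_per_byte: float) -> float:
    """Roofline bound: limited by compute or by memory traffic."""
    return min(PEAK_FP16_TFLOPS, flop_per_byte * MEMORY_BANDWIDTH_TBS)


def matmul_intensity(n: int, bytes_per_element: int = 2) -> float:
    """FLOPs per byte for an n x n matrix multiply, counting each matrix once."""
    flops = 2 * n**3
    bytes_moved = 3 * n * n * bytes_per_element
    return flops / bytes_moved


# Elementwise FP16 add: 1 FLOP per 6 bytes (two reads and one write of 2 bytes each).
elementwise_intensity = 1 / 6

print(f"Ridge point: {ridge_point_flop_per_byte():.0f} FLOP/byte")
print(f"4096 x 4096 matmul bound: {attainable_tflops(matmul_intensity(4096)):.0f} TFLOPS")
print(f"Elementwise add bound: {attainable_tflops(elementwise_intensity):.2f} TFLOPS")
```

Large matrix multiplications sit well above the ridge point and are limited by compute, while elementwise and many analytics operations are limited by memory bandwidth.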

- Compatibility and Integration with Nvidia A100 40GB

- Servers and Workstations:

- The A100 40GB is supported by a range of server and workstation platforms designed for high-performance computing. Vendors such as Dell EMC, HPE, and Lenovo offer systems built around this GPU.

- Storage Solutions:

- To complement the A100 40GB, high-speed storage solutions like Dell EMC PowerStore and HPE Nimble can be used. These storage systems support rapid data access and processing, enhancing the overall performance of computing tasks.

- Interconnects and Networking:

- Effective interconnect and networking solutions, including NVLink, are crucial for maximizing the performance of multi-GPU setups and ensuring efficient data transfer.
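When integrating the GPU into a system, a quick first check is whether the driver sees each card and its full memory. The sketch below calls `nvidia-smi` with its CSV query options and parses the result; the sample output string is hypothetical, and the helper names are illustrative. On a machine without `nvidia-smi`, `list_gpus()` returns an empty list.

```python
import shutil
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"]


def parse_gpu_list(csv_text: str) -> list:
    """Parse 'name, memory_in_MiB' lines into a list of dictionaries."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, _, memory = line.rpartition(",")
        try:
            gpus.append({"name": name.strip(), "memory_mib": int(memory.strip())})
        except ValueError:
            continue  # skip lines whose memory field is not a number
    return gpus


def list_gpus() -> list:
    """Query the local driver; return an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=False)
    return parse_gpu_list(result.stdout) if result.returncode == 0 else []


# Hypothetical sample output for a two-GPU system.
SAMPLE = "NVIDIA A100-SXM4-40GB, 40960\nNVIDIA A100-SXM4-40GB, 40960\n"
print(parse_gpu_list(SAMPLE))
print(list_gpus())
```

In multi-GPU systems, `nvidia-smi topo -m` additionally reports how the GPUs are connected to one another.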